Buddai: Personal Assistant AI Platform

The Buddai project is a full-stack, agent-centric productivity platform built to experiment with Model Context Protocol (MCP) services, personal knowledge bases, and conversational user interfaces. It combines a modern React web application, an extensible Node.js back end, and a catalogue of specialised MCP servers that orchestrate intelligent assistants capable of operating across calendars, notes, messaging channels, maps, and external APIs. This README acts as a comprehensive guide to the platform: the vision, the technical architecture, the development workflow, and the roadmap for iterative experimentation.

What to expect in this document

- A long-form narrative (roughly ten pages) summarising the motivation for Buddai, the system architecture, and the operational model.

- A pragmatic getting-started sequence covering local development, Docker usage, and Dev Containers.

- Clear entry points into the detailed documentation that lives in the /docs site and each subproject README.

- Guidance for extending Buddai with new agents, new MCP tools, or alternative front ends.

Table of Contents

- Why Buddai Exists

- Product Vision and Guiding Objectives

- High-Level Architecture

- Reference Platform Diagram

- Core Components and Responsibilities

- Platform Workflows and Data Lifecycles

- Getting Started: Cloning, Installing, and Bootstrapping

- Development Environments

- Running the Web Application

- Running the Web Server

- Running and Extending MCP Servers

- Agents, Prompts, and Tooling Customisation

- Knowledge Management and Semantic Search

- Observability, Reliability, and Operations

- Documentation, Readmes, and Further Reading

- Roadmap and Future Work

- Contributing and Collaboration Model

- Licensing and Contact

Why Buddai Exists

Modern professionals and students rely on a sprawling ecosystem of digital tools to manage schedules, notes, communications, and long-form knowledge. Task managers, calendar suites, document editors, messaging platforms, and AI copilots often coexist without truly cooperating. The burden of stitching these workflows together falls on the user, who must remember where information lives and how to transfer it between systems. Buddai was born from the desire to invert that burden: create a modular assistant that adapts to the user, not the other way around.

The project positions conversational AI as the connective tissue between disparate productivity domains. Instead of bolting generative AI onto existing tools as an afterthought, Buddai treats agentic behaviour as the foundation. Each agent is a programmable orchestrator equipped with tools (MCP servers) that encapsulate domain-specific capabilities such as reading calendars, generating embeddings, or fetching weather data. By designing from first principles around MCP, Buddai explores how a personal assistant can offer deeply integrated, privacy-preserving automation while remaining hackable and self-hostable.

Another core motivation is portability. Many AI-first productivity suites demand that users upload their private data to third-party clouds. Buddai deliberately emphasises local-first and hybrid deployments where sensitive information stays under the user's control. MCP servers may run on the same machine, inside containers, or on separate hosts. The web application can be deployed as a static bundle, and the back-end provides a narrow API surface with explicit authentication flows. Together, these traits make Buddai a living laboratory for balancing privacy, cost, and functionality.

Product Vision and Guiding Objectives

The vision for Buddai is to deliver a personal knowledge steward: a system that remembers context, reasons over time, and acts on behalf of the user through a curated set of tools. The following objectives anchor design decisions:

- Modularity at every layer. Agents can be added, updated, or retired without redeploying the entire stack. MCP servers communicate over a standard protocol, so the supervisor can evolve independently from the web UI.

- Extensibility through configuration. YAML-based agent manifests, declarative tool registrations, and TypeScript service factories encourage experimentation without deep rewrites.

- Hybrid intelligence. Local models handle sensitive tasks (e.g., embedding private notes), while API-based large models manage reasoning-heavy workflows. The system respects user preferences for privacy versus capability.

- Transparent operations. Logs, MongoDB-backed state (for Agenda jobs and location), and well-structured routers make it clear how actions propagate through the platform.

- Developer ergonomics. A Dev Container and docker-compose recipes ensure that contributors can reproduce environments quickly. Scripts like npm run docs:sync keep the documentation in sync with the code.

- Long-term maintainability. By embracing a monorepo with workspaces (web_app, web_server) and isolating MCP servers under their own folder, Buddai remains approachable even as components multiply.

These objectives echo the academic motivations captured in the broader thesis work: reclaim personal knowledge management from fragmented tooling, leverage the latest AI frameworks responsibly, and craft a solution that can continue to evolve after the initial research project concludes.

High-Level Architecture

At its core, Buddai is organised into three cooperative layers:

- Client Experience (Web App). A React 18 single-page application rendered via Vite. It presents login flows, agent dashboards, markdown-based editors, and real-time chats. The UI is optimised for desktop usage with dark-mode support, responsive layouts, and Shadcn/Tailwind components.

- Orchestration Back End (Web Server). An Express application that exposes RESTful endpoints for authentication, agent CRUD, configuration, tool registries, and messaging bridges. It also hosts service adapters for WhatsApp (Baileys), Telegram, Agenda/MongoDB scheduling, and the MCP supervisor runtime.

- Tooling Ecosystem (MCP Servers). A collection of specialised services implementing the Model Context Protocol. Each server encapsulates a domain: calendars, notes, maps, weather, semantic scraping, time utilities, etc. They register tools that agents can invoke via the supervisor.

Supporting these layers are infrastructure components: MongoDB for persistent storage (agenda, reminders, user location), optional Redis for caching, local ONNX models for embeddings, and Docker for packaging. The architecture is intentionally service-oriented, allowing each MCP server to scale independently or run only when needed.

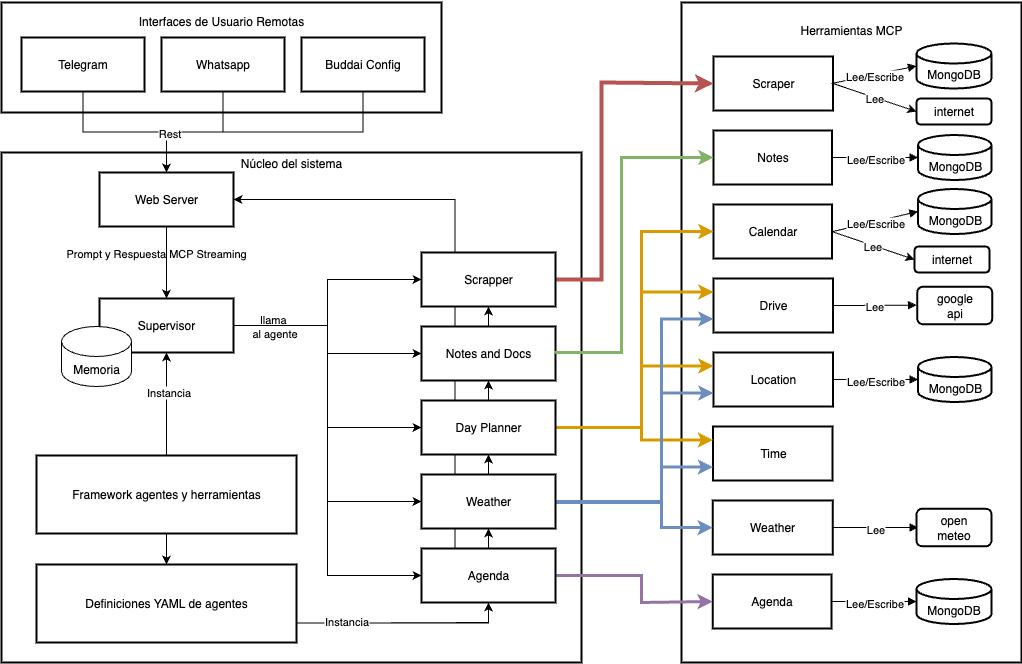

Reference Platform Diagram

The following diagram illustrates how the subsystems interact. The image lives alongside the documentation assets so it can be iterated without rewriting this README.

Key flows highlighted in the diagram:

- The web app authenticates against the web server, which validates credentials via MongoDB and issues encrypted JWTs.

- Authenticated requests fetch agent definitions, tool registries, and environment data from the web server.

- When an agent workflow runs, the supervisor coordinates MCP tool calls, fusing responses from services such as Notes (semantic search), Calendar (event sync), Drive (routing), or Weather (forecasts).

- External messaging (WhatsApp/Telegram) routes through the back end, delegating natural-language understanding to the same agentic pipelines for consistency.
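
The token step in the first flow can be illustrated in miniature. What follows is a standard-library sketch of issuing and checking an HS256-signed JWT, not Buddai's actual implementation: the function names sign_jwt and verify_jwt are hypothetical, and the AES encryption layer that the web server applies on top is left out because the Python standard library has no AES primitive.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _signature(signing_input: str, secret: str) -> bytes:
    """HMAC-SHA256 over the 'header.payload' string."""
    return hmac.new(secret.encode(), signing_input.encode(), hashlib.sha256).digest()


def sign_jwt(claims: Dict[str, Any], secret: str) -> str:
    """Hypothetical helper: build a compact HS256 token from a claims dict."""
    header = {"alg": "HS256", "typ": "JWT"}
    parts = [_b64url(json.dumps(p, separators=(",", ":")).encode()) for p in (header, claims)]
    signing_input = ".".join(parts)
    return f"{signing_input}.{_b64url(_signature(signing_input, secret))}"


def verify_jwt(token: str, secret: str, now: Optional[float] = None) -> Optional[Dict[str, Any]]:
    """Return the claims if the signature matches and the token has not expired."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        expected = _signature(f"{header_b64}.{payload_b64}", secret)
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        claims = json.loads(_b64url_decode(payload_b64))
    except ValueError:
        # Covers malformed tokens, bad base64, and invalid JSON.
        return None
    current = time.time() if now is None else now
    if "exp" in claims and claims["exp"] < current:
        return None
    return claims
```

The constant-time comparison (hmac.compare_digest) avoids leaking signature bytes through timing differences. A real deployment would more likely rely on a maintained JWT library than on hand-rolled code like this.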

Core Components and Responsibilities

Web Application (web_app/)

The SPA is the human-facing cockpit for Buddai. Core views include:

- Login with light/dark theming, mock credential hints for demos, and environment configuration.

- Agent roster with creation flows, quick filters, and contextual actions (archive, clone, delete).

- Agent editor featuring markdown prompt editing, temperature sliders, model selectors, and tool assignment checklists.

- Chat interface that supports system messages, streaming, error banners, and conversation history browsing.

- Settings panels for MCP registry inspection, environment variables, and backend URL overrides.

Technical highlights:

- Built with Vite + React 18 + TailwindCSS + Shadcn UI.

- src/services/api.tsx dynamically chooses between mock and real API clients based on VITE_USE_MOCKS.

- Local storage holds mock mode state (buddai_agents, buddai_settings, etc.) to enable offline demos.

- The Markdown editor uses debounced updates and AI-assisted prompt tweaks for rapid iteration.

For deeper exploration, read web_app/ and browse the generated docs at docs/web_app/index.md.

Web Server (web_server/)

The back end hosts the API consumed by the SPA and acts as the bridge between HTTP clients, MCP toolchains, and messaging adapters. Responsibilities include:

- Authentication via MongoDB credential checks and AES-encrypted JWT issuance.

- Agent metadata loading from agents/*.agent.yml and exposing CRUD endpoints for the UI.

- Model & tool registry surfacing what MCP services are available, their configuration, and health.

- Environment variable management for quick prototyping (in-memory updates with secure masking).

- Messaging adapters for WhatsApp (Baileys QR pairings) and Telegram bots.

- Agenda/Calendar coordination to schedule reminders and fan out sync jobs to MCP servers.

Key modules: src/services/agendaService, src/services/supervisorService, src/routes/web, src/routes/telegramController. See web_server/ for endpoint matrices, environment variables, and deployment notes.
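
The "secure masking" of environment variables mentioned above could look roughly like the sketch below. It is only an illustration under assumptions: the marker list, the function names, and the choice to reveal the last four characters are hypothetical, and the real web server is written for Node.js rather than Python.

```python
from typing import Dict

# Assumed naming convention for variables that should never be shown in full.
SENSITIVE_MARKERS = ("SECRET", "TOKEN", "KEY", "PASSWORD")


def mask_value(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a value."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def masked_view(env: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of `env` that is safe to send to a settings UI."""
    return {
        name: mask_value(value) if any(m in name.upper() for m in SENSITIVE_MARKERS) else value
        for name, value in env.items()
    }
```

For example, masked_view({"PORT": "8025", "JWT_SECRET": "supersecret"}) leaves PORT untouched and reduces the secret to asterisks followed by its last four characters.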

MCP Servers (mcp_servers/)

Each MCP server is self-contained. Highlights:

- Supervisor (FastAPI + FastMCP) orchestrates LangGraph agents, mediates tool invocation, and exposes run_supervisor.

- Notes (Node.js) manages markdown knowledge, performs embedding with local ONNX (nomic-embed-text-v1.5), and supports semantic retrieval.

- CalendarTool synchronises Apple/iCloud calendars, indexing events for search and creation.

- Agenda uses the agenda Node library backed by MongoDB to manage scheduled tasks.

- Scraper pulls structured data from websites, Instagram, and YouTube, generating embeddings for later recall.

- Drive (Python) wraps Google Maps APIs for routing and geocoding.

- Location retains the user's last-known position, enabling context-aware prompts.

- Time and Weather provide temporal and meteorological utilities.

Explore mcp_servers/ for a directory of services and build instructions. Individual server READMEs (e.g., mcp_servers/notes/) detail environment variables, tool signatures, and data formats.

Documentation Site (docs/)

Static documentation is generated via VitePress. The script npm run docs:sync converts all project READMEs into docs/**/index.md, ensuring they stay up to date. The site exposes navigation for the overview, web app, web server, and MCP catalog, mirroring the repository structure.

Platform Workflows and Data Lifecycles

Understanding how data moves through Buddai helps when debugging or extending the platform:

- User authentication. Credentials are submitted from the SPA to /login. The web server validates them against MongoDB (or mock services) and returns an encrypted JWT. Subsequent requests include this token.

- Agent selection. The SPA pulls /agents and displays metadata such as model, description, and assigned tools. Edits performed in the UI are sent back through POST/PUT endpoints, updating YAML manifests under agents/.

- Conversation loop. When a user starts chatting, the SPA streams messages to the web server, which forwards them to the supervisor. The supervisor evaluates the conversation graph, decides which MCP tools to call, and aggregates results into a coherent response.

- Tool invocation. MCP servers receive structured tool calls (JSON payloads) and respond with domain data. For example, the Notes server might perform an embedding search, while the Calendar server might create an event.

- Result delivery. The supervisor returns a final response to the web server, optionally attaching command results or follow-up actions. The SPA renders these in the chat interface.

- Background jobs. Agenda-driven tasks or calendar syncs are initiated via cron-like definitions, enabling offline or scheduled actions that do not require user presence.

- External messaging. WhatsApp or Telegram events flow through dedicated controllers, which normalise incoming text and dispatch it to the supervisor just like web chat interactions.

This layered workflow decouples the user experience from tool implementations, making it possible to replace any MCP server with an alternative without refactoring the front end.
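
The tool-invocation step of this workflow can be sketched as a small dispatcher. This is a simplified, in-process illustration and not the supervisor's actual code: the tool names (notes.search, calendar.create_event), the payload shape ({"tool": ..., "arguments": ...}), and the registry are all assumptions, and in Buddai the handlers would live in separate MCP servers reached over the protocol.

```python
import json
from typing import Any, Callable, Dict

ToolHandler = Callable[[Dict[str, Any]], Dict[str, Any]]

# Hypothetical in-process registry standing in for remote MCP servers.
TOOLS: Dict[str, ToolHandler] = {}


def register_tool(name: str) -> Callable[[ToolHandler], ToolHandler]:
    """Decorator that records a handler under a tool name."""
    def decorator(handler: ToolHandler) -> ToolHandler:
        TOOLS[name] = handler
        return handler
    return decorator


@register_tool("notes.search")
def search_notes(args: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for an embedding search; returns a canned match.
    return {"matches": [f"note mentioning {args['query']}"]}


@register_tool("calendar.create_event")
def create_event(args: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for event creation; echoes the requested title.
    return {"created": True, "title": args["title"]}


def dispatch(raw_call: str) -> Dict[str, Any]:
    """Decode a JSON tool call and route it to the matching handler."""
    call = json.loads(raw_call)
    handler = TOOLS.get(call["tool"])
    if handler is None:
        return {"ok": False, "error": f"unknown tool: {call['tool']}"}
    try:
        return {"ok": True, "result": handler(call.get("arguments", {}))}
    except KeyError as exc:
        return {"ok": False, "error": f"missing argument: {exc.args[0]}"}
```

Because callers only see the tool name and a JSON payload, a handler can be swapped for a different implementation without touching whatever sits in front of the dispatcher, which is the decoupling the paragraph above describes.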

Getting Started: Cloning, Installing, and Bootstrapping

Repository structure

root

|--- web_app/ # React front end

|--- web_server/ # Express back end

|--- mcp_servers/ # Model Context Protocol services

|--- docs/ # VitePress documentation (generated)

|--- agents/ # Agent manifests and prompt assets

|--- scripts/ # Support scripts (sync docs, build helpers)

Clone and initial setup

git clone https://github.com/your-org/buddai.git

cd buddai

Node dependencies

The root workspace defines npm workspaces for web_app and web_server. Install dependencies from the repository root to ensure hoisting works correctly:

npm install

Note about @whiskeysockets/libsignal-node

The WhatsApp integration depends on baileys, which in turn fetches @whiskeysockets/libsignal-node from GitHub. If the standard npm registry rejects the package (E404), configure npm to allow GitHub fetches:

npm config set "@whiskeysockets:registry" "https://npm.pkg.github.com"

echo "//npm.pkg.github.com/:_authToken=YOUR_GITHUB_TOKEN" >> ~/.npmrc

npm install

Alternatively, install libsignal manually as a git dependency or comment out the WhatsApp adapter until credentials are available.

Python and system dependencies

Some MCP servers use Python (e.g., drive/, weather/). Ensure you have Python 3.11+ and pip available. Docker builds encapsulate most dependencies, but local development may require uv or pip-tools depending on the server.

MongoDB

MongoDB is required for Agenda jobs, login validation, and location storage. You can run a local instance or leverage the provided docker-compose.dev.yml to spin up MongoDB alongside other services.

Development Environments

Local machine

Run services directly from your host. Recommended when you need immediate iteration on TypeScript or Python files.

- Install Node.js 20+ and npm 10+.

- Install Python and other language toolchains required by MCP servers you plan to run.

- Start MongoDB locally or via Docker.

- Launch npm run dev inside web_app/ and the relevant scripts in MCP directories.

Docker Compose

Build and isolate services using containers.

docker compose build # from repo root to build base services

docker compose up -d mongo # example: launch MongoDB only

In mcp_servers/, an additional compose file builds container images for each MCP service. These images can be published or orchestrated with a supervisor stack.

VS Code Dev Container

The repository ships with configuration for VS Code Dev Containers, enabling a consistent development environment without polluting your host system.

- Install Docker Desktop and VS Code.

- Install the Dev Containers extension.

- Open the repository in VS Code; when prompted, choose Reopen in Container.

- Wait for the container to build (includes Node, Python, and other dependencies).

- Start hacking. The dev container installs Python dependencies automatically after creation and on rebuilds. You can still run pip install . manually inside the container when adjusting Python packages.

Rebuild the container via Dev Containers: Rebuild and Reopen in Container whenever you update Docker files or dependency lists.

Running the Web Application

From web_app/:

npm install # if not already installed from root

npm run dev # start Vite dev server (default mocks enabled)

To target the real back end:

- Create .env.development.local with VITE_USE_MOCKS=false.

- Ensure the web server is running and reachable.

- Restart the dev server.

Build production assets:

npm run build

The dist/ folder can be served via static hosting platforms or integrated into a container image.

Running the Web Server

From web_server/:

npm install

npm run dev # start Express server with hot reload (ts-node/tsx depending on setup)

Set environment variables (example .env):

PORT=8025

MONGO_HOST=localhost

JWT_SECRET=supersecret

JWT_EXPIRY=7d

AES_SECRET=local_aes_key

TELEGRAM_BOT_TOKEN=... # optional

Use DEBUG=web-server:* for verbose logging. Production deployments can leverage node index.js or process managers like PM2. Container builds rely on the included Dockerfile.

Running and Extending MCP Servers

Each directory under mcp_servers/ contains its own runtime. Typical patterns:

Node-based servers (e.g., notes/, calendarTool/, agenda/):

cd mcp_servers/notes

npm install

npm run dev

Python servers (e.g., drive/, weather/, time/):

cd mcp_servers/drive

python -m venv .venv

source .venv/bin/activate

pip install -r requirements.txt

python app.py

For convenience, run docker compose build inside mcp_servers/ to build container images for every service. This is especially useful when deploying to remote hosts or testing integration.

Creating a new MCP server

- Scaffold a new folder under mcp_servers/<service-name>/.

- Define the tool manifest (*.mcp.yml) describing available actions, input schema, and dependencies.

- Implement the server in your language of choice, ensuring it speaks MCP (see the Model Context Protocol SDK for examples).

- Add a README detailing environment variables, commands, and sample tool calls.

- Register the tool with the supervisor and the relevant agent manifests.

The docs site (docs/mcp_servers/index.md) provides navigation into each server, and the supervisor README explains how to extend the LangGraph workflow when adding new tools.
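
Before registering a new server, it can help to sanity-check its tool manifest. The sketch below assumes the *.mcp.yml file has already been parsed into a dictionary, and it assumes a hypothetical layout (a top-level name plus a tools list whose entries carry name, description, and input_schema). The real manifest format may differ, so treat the field names as placeholders.

```python
from typing import Any, Dict, List


def validate_manifest(manifest: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems; an empty list means the manifest passed."""
    problems: List[str] = []
    if not manifest.get("name"):
        problems.append("manifest needs a non-empty 'name'")

    tools = manifest.get("tools") or []
    if not tools:
        problems.append("manifest declares no tools")

    seen = set()
    for index, tool in enumerate(tools):
        # Fall back to a positional label when the tool has no name.
        label = tool.get("name") or f"tools[{index}]"
        if not tool.get("name"):
            problems.append(f"{label}: missing 'name'")
        elif tool["name"] in seen:
            problems.append(f"{label}: duplicate tool name")
        seen.add(tool.get("name"))

        if not tool.get("description"):
            problems.append(f"{label}: missing 'description'")

        schema = tool.get("input_schema")
        if not isinstance(schema, dict) or schema.get("type") != "object":
            problems.append(f"{label}: 'input_schema' must be a JSON Schema object")
    return problems
```

Returning every problem at once, rather than raising on the first, tends to make review of a new server quicker.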

Agents, Prompts, and Tooling Customisation

Agents live under the agents/ directory as YAML manifests. Each manifest typically includes:

- Metadata: name, description, default model, temperature.

- Prompting: system instructions, conversation starters, and fallback replies.

- Tool bindings: list of MCP tools the agent is authorised to call.

- Supervisor graph: optional overrides for the LangGraph workflow.
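
The manifest fields listed above map naturally onto a small data structure. The sketch below is an assumption-laden illustration: the class name, field names, defaults, and the example model identifier are hypothetical and do not reproduce Buddai's actual YAML schema. It shows how tool bindings could be checked against the set of registered tools.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AgentManifest:
    """Hypothetical in-memory form of an agents/*.agent.yml file."""
    name: str
    description: str
    model: str
    temperature: float = 0.2
    system_prompt: str = ""
    tools: List[str] = field(default_factory=list)

    def unknown_tools(self, registry: Set[str]) -> List[str]:
        """Tools the manifest binds that no MCP server has registered."""
        return sorted(set(self.tools) - registry)

    def may_call(self, tool: str) -> bool:
        """An agent is only authorised for tools listed in its manifest."""
        return tool in self.tools


planner = AgentManifest(
    name="planner",
    description="Schedules meetings and links them to notes",
    model="example-model",  # placeholder, not a real model identifier
    tools=["calendar.create_event", "notes.search", "weather.forecast"],
)
```

Here planner.unknown_tools({"calendar.create_event", "notes.search"}) would report weather.forecast as unregistered, a useful check before an agent reaches the roster.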

To create or modify an agent:

- Duplicate an existing manifest (e.g., agents/supervisor.agent.yml).

- Update the metadata to describe the agent's purpose.

- Adjust the prompt text to align with new behaviours.

- Define which MCP tools are available; omit tools that are irrelevant or risky.

- Sync the docs (npm run docs:sync) to regenerate docs/agents pages if needed.

- Reload the web app to view the agent in the management roster.

The web app's Markdown editor offers a friendly surface for editing prompts directly from the UI. Saved changes propagate to the file system via the web server API.

When introducing a new MCP tool, update the supervisor configuration so that agents know when and how to call it. The supervisor's graph can branch based on context, escalate to fallback models, or apply guardrails around tool usage. Because the supervisor is itself an MCP server, Buddai treats tool orchestration as just another service to operate and extend.

Knowledge Management and Semantic Search

Buddai approaches personal knowledge as a living corpus:

- Notes MCP ingests markdown documents, generates embeddings with local ONNX models (nomic-embed-text-v1.5), and stores them for retrieval. The pipeline normalises text, handles chunking, and returns similarity scores.

- Scraper MCP fetches external content (blogs, Instagram posts, YouTube transcripts), cleans the text, and indexes it for cross-referencing within conversations.

- Calendar Tool mirrors events from Apple/iCloud into MongoDB, making them searchable through the supervisor. Agents can create new events, update schedules, or cross-reference notes with upcoming meetings.

- Agenda Service schedules reminders and follow-up actions using the agenda Node library, enabling timed messages or routines.

The combination of embeddings, retrieval-augmented generation, and domain-specific tools means agents can answer contextual questions like "What did I decide about the Q3 strategy during last week's meeting?" while also scheduling follow-up reminders or fetching directions to the meeting location.
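
The retrieval half of that pipeline (chunking plus similarity scoring) can be sketched with plain Python. This is not the Notes server's code: the function names are hypothetical, the word-count chunker is a deliberately naive stand-in, and the vectors are assumed to come from an embedding model run separately.

```python
import math
from typing import Dict, List, Sequence, Tuple


def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_matches(
    query_vec: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Rank stored chunk vectors by similarity to the query, best first."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec)) for chunk_id, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]
```

A linear scan like this is adequate for a personal note collection of modest size; a larger corpus would usually call for an approximate nearest-neighbour index instead.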

Privacy remains central: users can opt to run embedding models locally, keep MCP servers on trusted hardware, and disable any tool that touches external APIs.

Observability, Reliability, and Operations

Maintaining Buddai in production (or even in a lab environment) benefits from layered observability:

- Logging: The back end uses the debug package to emit namespaced logs (web-server:*). MCP servers log to stdout/stderr by default; container orchestrators (Docker, Kubernetes) can collect them centrally.

- Health checks: Implement HTTP health endpoints for each MCP server to allow the supervisor to detect outages gracefully. Many services already expose simple status routes.

- Metrics: Consider integrating Prometheus exporters or OpenTelemetry instrumentation, especially for monitoring tool latency, success rates, and usage patterns.

- Tracing user actions: Tie conversation IDs between the SPA, web server, and supervisor to reconstruct end-to-end flows during debugging.

- Configuration management: Store secrets in environment variables or secret managers (Vault, Doppler). Avoid embedding credentials in agent manifests.

Reliability strategies include retrying MCP calls, queuing long-running tasks, and isolating optional services so the core assistant remains operational even if specialised tools go offline.
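
Retrying MCP calls is commonly done with exponential backoff. The sketch below is one possible shape for it, under assumptions: call_with_retry is a hypothetical helper, ConnectionError stands in for whatever transient failure a real MCP client raises, and the sleep function is injectable so the behaviour can be exercised without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on ConnectionError with doubling delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of retries: surface the last failure
            # Wait base_delay, then 2x, then 4x, ... before the next try.
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")
```

Only transient errors are retried here; anything else propagates immediately, so a malformed tool call fails fast instead of being repeated.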

Documentation, Readmes, and Further Reading

Buddai maintains documentation on multiple layers. Use the following entry points:

- Docs site overview: docs/index.md (generated from this README via npm run docs:sync).

- Web App details: web_app/ and docs/web_app/index.md.

- Web Server guide: web_server/ and docs/web_server/index.md.

- MCP catalog: mcp_servers/ and docs/mcp_servers/index.md.

- Individual MCP servers: browse mcp_servers/<service>/ for tool signatures and configuration tips.

- Research artefacts: Internal thesis chapters, design notes, and methodological analysis that inspired Buddai are stored separately. Highlights cover implementation details, knowledge management rationale, observability considerations, and long-term ideas.

Synchronise documentation after editing README files:

npm run docs:sync

npm run docs:dev # preview the VitePress site

Roadmap and Future Work

Buddai is intentionally unfinished; it thrives on iterative experimentation. Active and aspirational initiatives include:

- Richer multimodal inputs. Expand agents to parse images, voice notes, and documents, possibly via MCP plugins for Whisper or local OCR pipelines.

- Fine-grained permissioning. Introduce role-based access for agents, separating personal and shared workspaces.

- Streaming supervisor responses. Adopt server-sent events or WebSockets to deliver partial responses and tool progress updates to the web app.

- Stateful chat memory. Persist conversation threads in a vector store to enable long-term context beyond immediate exchanges.

- Automated testing. Add Playwright suites for the web app and contract tests for MCP servers to ensure tool signatures remain stable.

- Deployment recipes. Provide Terraform/Ansible scripts for deploying the full stack to cloud environments while preserving privacy guarantees.

- Community contributions. Encourage new MCP servers (finance trackers, smart home bridges) and share them through the documentation site.

Refer to the internal thesis materials for academically motivated future lines of work.

Contributing and Collaboration Model

While Buddai started as a personal research project, it embraces collaborative development norms:

- Fork the repository and create feature branches scoped to specific changes.

- Keep documentation updated, especially when altering agent manifests, MCP interfaces, or environment variables.

- Run npm run docs:sync before submitting pull requests so the docs site reflects the latest state.

- Include tests or reproducible examples whenever possible; even simple smoke scripts help future maintainers.

- Coordinate major changes (new MCP servers, protocol shifts) via design discussions or RFC-style documents stored in docs/ or the private research archives.

Code style largely follows ESLint/Prettier defaults for JavaScript/TypeScript and Black/ruff for Python services. Tailor lints per service as needed.

Licensing and Contact

Buddai is an internal project. Redistribution and external use require permission from the maintainers. If you are collaborating on the research or wish to extend Buddai for academic work, reach out via the project communication channels documented in the private appendices.

For issues, ideas, or contributions:

- File GitHub issues within this repository.

- Document design proposals in the private research notes or new markdown files under docs/.

- Contact the project maintainer directly through the channels specified in private documentation.

Happy hacking, and welcome to the Buddai ecosystem.